Our .NET OpenAI library is designed for easy integration with the OpenAI API using C#. It is community-maintained, kept up to date and fully tested, and supports .NET 6.0 and above.

With an intuitive API, our library simplifies working with OpenAI's natural language processing tools. It also manages HTTP client usage to prevent socket exhaustion and DNS update failures, and it supports the OpenAI streaming API so that responses can be processed as they are generated.

YouTube tutorial: Creating your own ChatGPT clone with .NET: A Step-by-Step Guide

Install the NuGet package

    Install-Package OpenAI.Net.Client

Register services using the provided extension methods

    services.AddOpenAIServices(options => {
        options.ApiKey = builder.Configuration["OpenAI:ApiKey"];
    });

N.B. We recommend using environment variables, configuration files or a secret file for storing the API key securely. See here for further details.
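As one way of following the recommendation above, the sketch below reads the key from an environment variable and fails fast when it is missing. The variable name OPENAI_API_KEY and the ApiKeyProvider class are assumptions made for this illustration; they are not part of the library.

```csharp
using System;

public static class ApiKeyProvider
{
    // Assumed variable name for this sketch; pick whatever suits your deployment.
    public const string VariableName = "OPENAI_API_KEY";

    // Returns the key from the environment, or throws if it is missing or blank.
    public static string GetApiKey()
    {
        var key = Environment.GetEnvironmentVariable(VariableName);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                $"Environment variable '{VariableName}' is not set.");
        }
        return key;
    }
}
```

The result could then be assigned in the registration callback, for example options.ApiKey = ApiKeyProvider.GetApiKey();. Alternatively, because Host.CreateDefaultBuilder includes environment variables in its configuration, a variable named OpenAI__ApiKey (with a double underscore) should surface as builder.Configuration["OpenAI:ApiKey"] without any extra code.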

You can view examples of a console, web application and Blazor app using the streaming API here.

You can also have a look at the Integration Tests for usage examples here.

Simple console app usage below.

    using System;
    using System.Threading.Tasks;
    using Microsoft.Extensions.DependencyInjection;
    using Microsoft.Extensions.Hosting;
    using OpenAI.Net;

    namespace ConsoleApp
    {
        internal class Program
        {
            // An async entry point must return Task; "async void Main" does not compile.
            static async Task Main(string[] args)
            {
                using var host = Host.CreateDefaultBuilder(args)
                    .ConfigureServices((builder, services) =>
                    {
                        services.AddOpenAIServices(options => {
                            options.ApiKey = builder.Configuration["OpenAI:ApiKey"];
                        });
                    })
                    .Build();

                // GetRequiredService throws if the service is not registered instead of returning null.
                var openAi = host.Services.GetRequiredService<IOpenAIService>();
                var response = await openAi.TextCompletion.Get("How long until we reach Mars?");

                if (response.IsSuccess)
                {
                    foreach (var result in response.Result.Choices)
                    {
                        Console.WriteLine(result.Text);
                    }
                }
                else
                {
                    Console.WriteLine($"{response.ErrorMessage}");
                }
            }
        }
    }

The registration extension also allows the HTTP client to be configured via the IHttpClientBuilder interface. This makes it possible, for example, to add a Polly retry policy. See example here.
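A common choice for such a retry policy is exponential backoff, where the wait before retry n is 2^n seconds; this is the formula the registration example in this section passes to Polly. To make the resulting schedule concrete, here is a minimal sketch in plain C# with no Polly dependency. The BackoffSchedule class and its Compute method are names invented for this illustration.

```csharp
using System;
using System.Collections.Generic;

public static class BackoffSchedule
{
    // Returns the wait before each retry, using 2^retryAttempt seconds
    // with retryAttempt counted from 1 (as Polly does).
    public static IReadOnlyList<TimeSpan> Compute(int retryCount)
    {
        var delays = new List<TimeSpan>();
        for (var retryAttempt = 1; retryAttempt <= retryCount; retryAttempt++)
        {
            delays.Add(TimeSpan.FromSeconds(Math.Pow(2, retryAttempt)));
        }
        return delays;
    }
}
```

With three retries, BackoffSchedule.Compute(3) yields waits of 2, 4 and 8 seconds, so a request that keeps failing is abandoned after roughly 14 seconds of waiting plus the time taken by the requests themselves.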

services.AddOpenAIServices(options => {

options.ApiKey = builder.Configuration["OpenAI:ApiKey"];

},

(httpClientOptions) => {

httpClientOptions.AddPolicyHandler(GetRetryPolicy());

});

static IAsyncPolicy<HttpResponseMessage> GetRetryPolicy()

{

return HttpPolicyExtensions

.HandleTransientHttpError()

.WaitAndRetryAsync(3, retryAttempt => TimeSpan.FromSeconds(Math.Pow(2,

retryAttempt)));

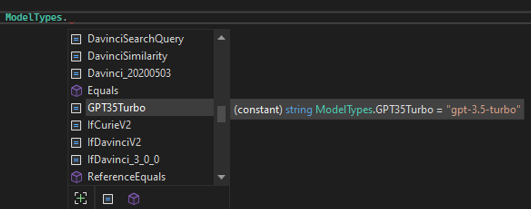

}Models type strings can be found in ModelTypes class.

    ModelTypes.GPT35Turbo

Text completion

    var response = await service.TextCompletion.Get("Say this is a test", o => {
        o.MaxTokens = 1024;
        o.BestOf = 2;
    });

Text completion with streaming

    await foreach (var response in service.TextCompletion.GetStream("Say this is a test"))
    {
        Console.WriteLine(response?.Result?.Choices[0].Text);
    }

Text completion with multiple prompts

    var prompts = new List<string>()
    {
        "Say this is a test",
        "Say this is not a test"
    };

    var response = await service.TextCompletion.Get(prompts, o => {
        o.MaxTokens = 1024;
        o.BestOf = 2;
    });

Chat completion

    var messages = new List<Message>
    {
        Message.Create(ChatRoleType.System, "You are a helpful assistant."),
        Message.Create(ChatRoleType.User, "Who won the world series in 2020?"),
        Message.Create(ChatRoleType.Assistant, "The Los Angeles Dodgers won the World Series in 2020."),
        Message.Create(ChatRoleType.User, "Where was it played?")
    };

    var response = await service.Chat.Get(messages, o => {
        o.MaxTokens = 1000;
    });

Chat completion with streaming

    var messages = new List<Message>
    {
        Message.Create(ChatRoleType.System, "You are a helpful assistant."),
        Message.Create(ChatRoleType.User, "Who won the world series in 2020?"),
        Message.Create(ChatRoleType.Assistant, "The Los Angeles Dodgers won the World Series in 2020."),
        Message.Create(ChatRoleType.User, "Where was it played?")
    };

    await foreach (var t in service.Chat.GetStream(messages))
    {
        Console.WriteLine(t?.Result?.Choices[0].Delta?.Content);
    }

Audio transcription

    var response = await service.Audio.GetTranscription(@"Audio\TestTranscription.m4a");

Audio translation

    var response = await service.Audio.GetTranslation(@"Audio\Translation.m4a");

Chat completion used as a spell checker

    var messages = new List<Message>
    {
        Message.Create(ChatRoleType.System, "You are a spell checker. Fix the spelling mistakes"),
        Message.Create(ChatRoleType.User, "What day of the wek is it?"),
    };

    var response = await service.Chat.Get(messages, o => {
        o.MaxTokens = 1000;
    });

Image edit

    var response = await service.Images.Edit("A cute baby sea otter", @"Images\BabyCat.png", @"Images\Mask.png", o => {
        // The OpenAI API accepts between 1 and 10 images per request.
        o.N = 2;
    });

Image edit using bytes

    var response = await service.Images.Edit("A cute baby sea otter", File.ReadAllBytes(@"Images\BabyCat.png"), File.ReadAllBytes(@"Images\Mask.png"), o => {
        // The OpenAI API accepts between 1 and 10 images per request.
        o.N = 2;
    });

Image generation

    var response = await service.Images.Generate("A cute baby sea otter", 2, "1024x1024");

Image variation

    var response = await service.Images.Variation(@"Images\BabyCat.png", o => {
        o.N = 2;
        o.Size = "1024x1024";
    });

Image variation using bytes

    var response = await service.Images.Variation(File.ReadAllBytes(@"Images\BabyCat.png"), o => {
        o.N = 2;
        o.Size = "1024x1024";
    });

Fine-tune create

    var response = await service.FineTune.Create("myfile.jsonl", o => {
        o.BatchSize = 1;
    });

Fine-tune get all

    var response = await service.FineTune.Get();

Fine-tune get by ID

    var response = await service.FineTune.Get("fineTuneId");

Fine-tune get events

    var response = await service.FineTune.GetEvents("fineTuneId");

Fine-tune delete

    var response = await service.FineTune.Delete("modelId");

Fine-tune cancel

    var response = await service.FineTune.Cancel("fineTuneId");

File upload

    var response = await service.Files.Upload(@"Images\BabyCat.png");

File upload using bytes

    var response = await service.Files.Upload(bytes, "mymodel.jsonl");

File get content

    var response = await service.Files.GetContent("fileId");

File get details

    var response = await service.Files.Get("fileId");

File get all

    var response = await service.Files.Get();

File delete

    var response = await service.Files.Delete("1");

Embeddings create

    var response = await service.Embeddings.Create("The food was delicious and the waiter...", "text-embedding-ada-002", "test");

Models get all

    var response = await service.Models.Get();

Models get

    var response = await service.Models.Get("babbage");

Moderation create

    var response = await service.Moderation.Create("input text", "test");

This project has 100% code coverage with unit tests and a 100% pass rate with Stryker mutation testing.

See latest Stryker report here.

We also have integration tests for each service.

This should provide confidence in the library going forwards.

Contributions are welcome.

Minimum requirements for any PR:

- MUST include unit tests and maintain 100% coverage.
- MUST pass Stryker mutation testing with a 100% score.
- SHOULD have integration tests.