This is the official implementation of our BMVC 2020 paper, RODEO: Replay for Online Object Detection. The paper is available on arXiv at https://arxiv.org/abs/2008.06439.

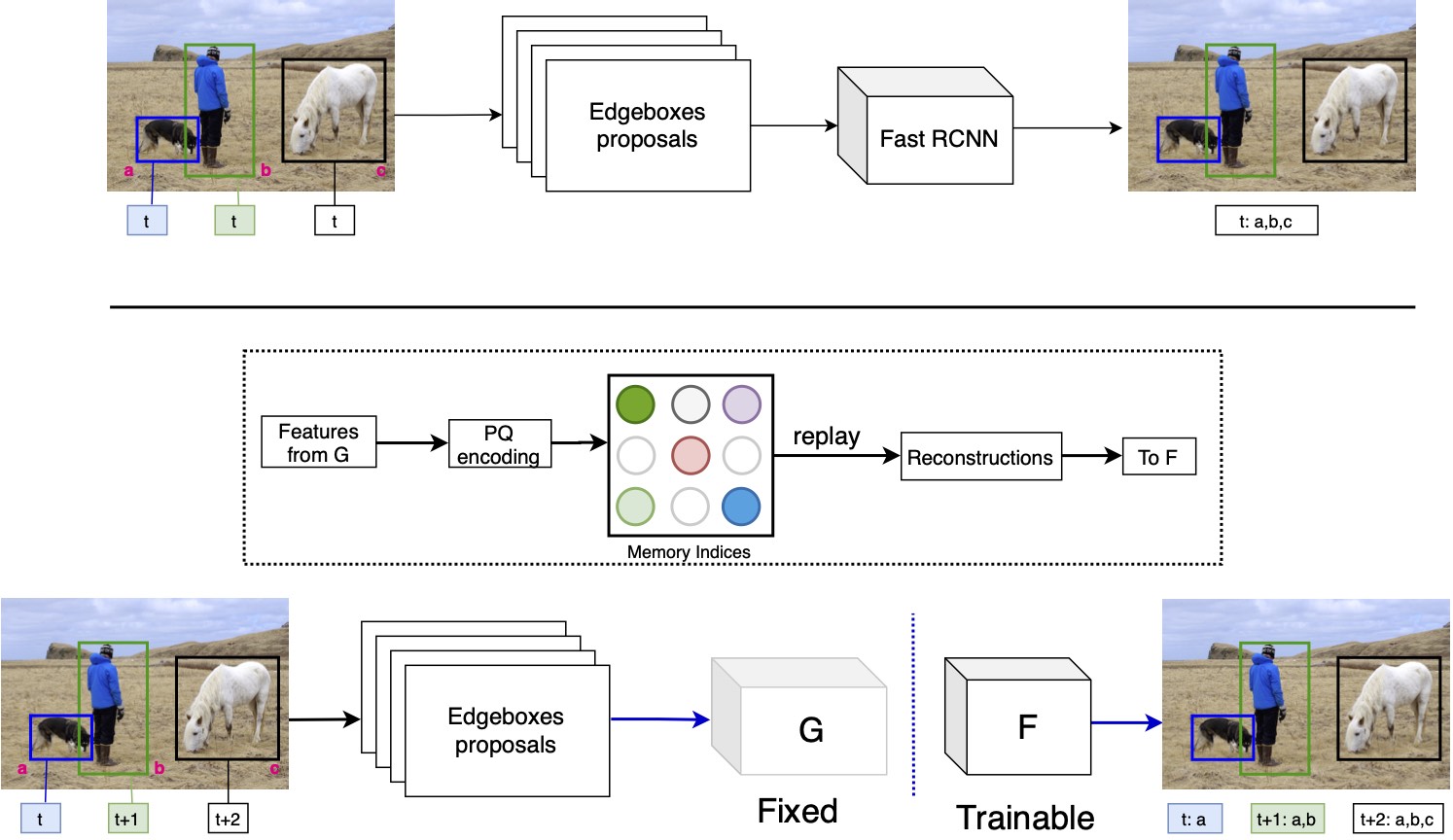

Humans can incrementally learn to do new visual detection tasks, which is a huge challenge for today's computer vision systems. Incrementally trained deep learning models lack backwards transfer to previously seen classes and suffer from a phenomenon known as catastrophic forgetting. In this paper, we pioneer online streaming learning for object detection, where an agent must learn examples one at a time with severe memory and computational constraints. In object detection, a system must output all bounding boxes for an image with the correct label. Unlike earlier work, the system described in this paper can learn how to do this task in an online manner with new classes being introduced over time. We achieve this capability by using a novel memory replay mechanism that replays entire scenes in an efficient manner. We achieve state-of-the-art results on both the PASCAL VOC 2007 and MS COCO datasets.

We recommend setting up a conda environment with the environment file in this repo.

conda env create --file envname.yml

To set up the dataset and evaluation, download the COCO API as suggested in the PyTorch object detection tutorial and this Colab notebook.

To set up pycocotools, run the script `download.sh`.

We use pre-calculated Edgebox proposals to train our Fast-RCNN models.

For both the MS COCO and VOC datasets, the half checkpoint and Edgebox proposals are located in this Google Drive folder.

- To train the models for the base init classes, run `train_bettercoco.py` or `train_better` for VOC. This outputs the half checkpoint that can be used for incremental training.
- To extract features, run the `extract_coco_features.py` or `extract_voc_features.py` file, which will output features to h5 files.
- To get the PQ reconstructed features, run `pq_encoding_voc.py` or `pq_encoding_coco.py`.
- Run `runcoco.sh` to train offline and other baseline models.
- Run `runreplay.sh` to run the RODEO model with replay sizes as a hyper-parameter.
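
To illustrate what "PQ reconstructed features" means in the step above, here is a minimal NumPy sketch of product quantization (PQ): each feature vector is split into sub-vectors, every sub-vector is replaced by the index of its nearest centroid in a learned codebook, and an approximate vector is rebuilt later from those indices. This is not the code in `pq_encoding_voc.py` or `pq_encoding_coco.py`. The function names (`kmeans`, `pq_train`, `pq_encode`, `pq_decode`), the toy feature shape, and the codebook settings are assumptions made for the example.

```python
import numpy as np


def kmeans(x, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of centroids."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(iters):
        # Assign every vector to its nearest centroid (squared Euclidean distance).
        dists = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = x[labels == j]
            if len(members):  # an empty cluster keeps its previous centroid
                centroids[j] = members.mean(axis=0)
    return centroids


def pq_train(x, num_codebooks, codebook_size):
    """Learn one codebook per sub-vector.

    Returns an array of shape (num_codebooks, codebook_size, sub_dim).
    The feature dimension must be divisible by num_codebooks.
    """
    subs = np.split(x, num_codebooks, axis=1)
    return np.stack([kmeans(s, codebook_size, seed=i) for i, s in enumerate(subs)])


def pq_encode(x, codebooks):
    """Replace each sub-vector with the index of its nearest centroid."""
    subs = np.split(x, len(codebooks), axis=1)
    codes = [
        ((s[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)
        for s, cb in zip(subs, codebooks)
    ]
    # uint8 is enough as long as codebook_size <= 256.
    return np.stack(codes, axis=1).astype(np.uint8)


def pq_decode(codes, codebooks):
    """Reconstruct approximate vectors by concatenating the indexed centroids."""
    return np.concatenate(
        [cb[codes[:, i]] for i, cb in enumerate(codebooks)], axis=1
    )


# Toy stand-in for extracted features: 500 vectors of dimension 16 (assumed sizes).
rng = np.random.default_rng(0)
features = rng.normal(size=(500, 16)).astype(np.float32)

codebooks = pq_train(features, num_codebooks=4, codebook_size=32)
codes = pq_encode(features, codebooks)        # shape (500, 4), dtype uint8
reconstructed = pq_decode(codes, codebooks)   # shape (500, 16), approximate features
```

In this toy setting each vector is stored as 4 bytes of codes instead of 16 float32 values, which is the kind of saving that makes keeping many past examples for replay affordable. The reconstruction is lossy, so the rebuilt features only approximate the originals.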

One of the comparison methods discussed (ILWFOD) was proposed in the ICCV 2017 paper "Incremental Learning of Object Detectors without Catastrophic Forgetting". Their code can be found in this repo: https://github.com/kshmelkov/incremental_detectors.

If using this code, please cite our paper.

@inproceedings{acharya2020rodeo,

title={RODEO: Replay for Online Object Detection},

author={Acharya, Manoj and Hayes, Tyler L. and Kanan, Christopher},

booktitle={The British Machine Vision Conference},

year={2020}

}